Speech analysis of teaching assistant interventions in small group collaborative problem solving with undergraduate engineering students

Published in British Journal of Educational Technology, 2024

This descriptive study focuses on using voice activity detection (VAD) algorithms to extract student speech data in order to better understand the collaboration of small group work and the impact of teaching assistant (TA) interventions in undergraduate engineering discussion sections. Audio data were recorded from individual students wearing head-mounted noise-cancelling microphones. Video data of each student group were manually coded for collaborative behaviours (eg, group task relatedness, group verbal interaction and group talk content) of students and TA–student interactions. The analysis includes information about the turn taking, overall speech duration patterns and amounts of overlapping speech observed both when TAs were intervening with groups and when they were not. We found that TAs very rarely provided explicit support regarding collaboration. Key speech metrics, such as amount of turn overlap and maximum turn duration, revealed important information about the nature of student small group discussions and TA interventions. TA interactions during small group collaboration are complex and require nuanced treatments when considering the design of supportive tools.
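To make the speech metrics named in the abstract concrete, the following is a minimal Python sketch of how per-student speech duration, maximum turn duration and overlapping speech could be computed from VAD output. It is an illustration only, not the paper's pipeline: the function name `speech_metrics`, the input layout (one list of `(start, end)` times in seconds per speaker) and the pairwise definition of overlap are all assumptions made for this example.

```python
from itertools import combinations


def speech_metrics(segments):
    """Summarise VAD segments for one group.

    segments: dict mapping a speaker label to a list of (start, end)
    times in seconds. This layout is a hypothetical stand-in for VAD
    output; segments for a single speaker are assumed not to overlap.
    """
    # Total time each speaker was detected as speaking.
    total = {spk: sum(end - start for start, end in segs)
             for spk, segs in segments.items()}

    # Longest single speech segment per speaker (0.0 if none).
    longest = {spk: max((end - start for start, end in segs), default=0.0)
               for spk, segs in segments.items()}

    # Overlap summed over every pair of speakers. Time when three or
    # more speakers talk at once is counted once per pair (assumption).
    overlap = 0.0
    for a, b in combinations(segments, 2):
        for s1, e1 in segments[a]:
            for s2, e2 in segments[b]:
                overlap += max(0.0, min(e1, e2) - max(s1, s2))

    return total, longest, overlap


# Made-up example: three students, times in seconds.
example = {
    "A": [(0.0, 2.0), (5.0, 6.0)],
    "B": [(1.5, 4.0)],
    "C": [(5.5, 7.0)],
}
total, longest, overlap = speech_metrics(example)
print(total, longest, overlap)
```

Running the same function separately on time windows with and without a TA present would give the kind of comparison the abstract describes, assuming those windows are known from the video coding.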

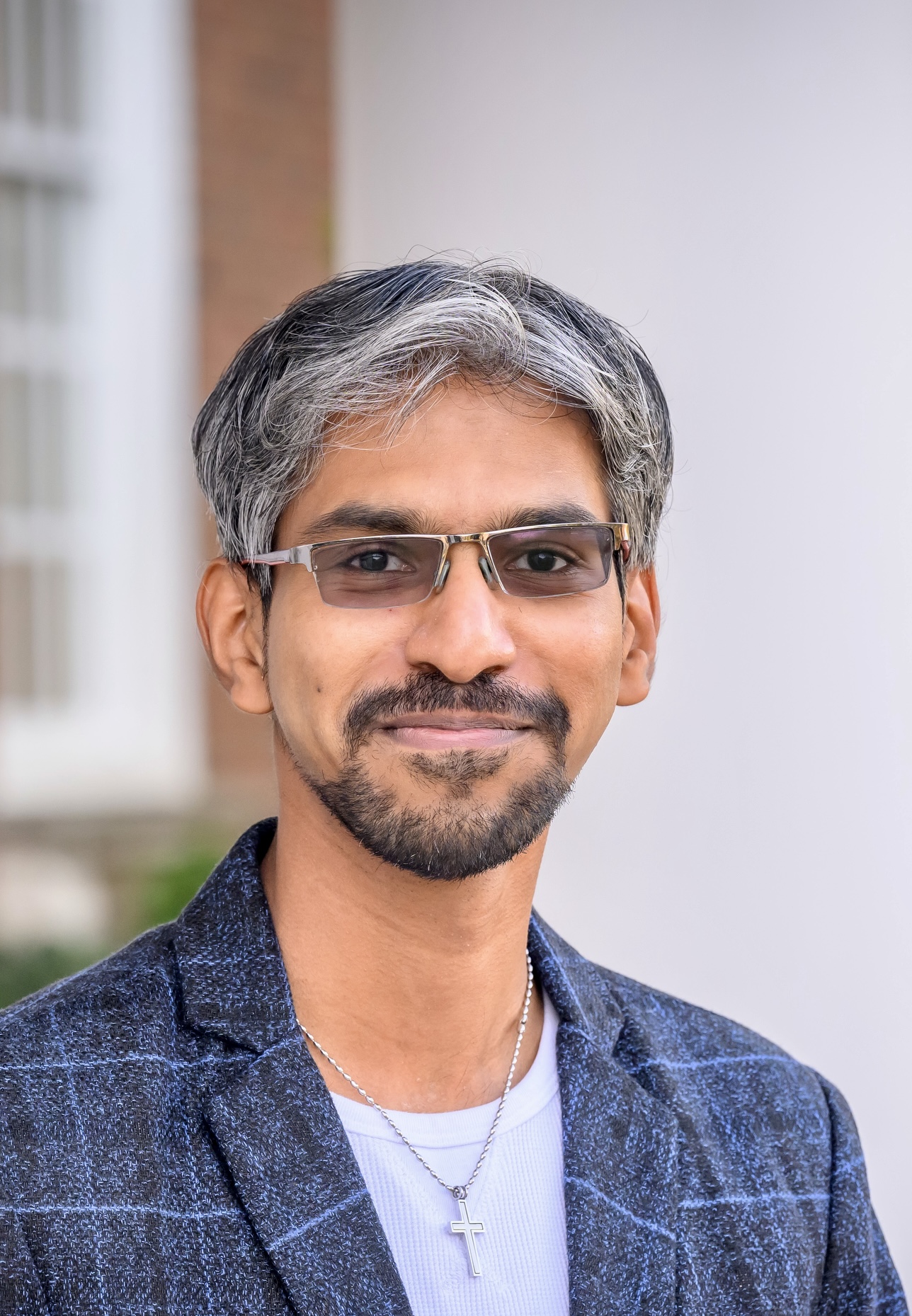

Recommended citation: C. M. D’Angelo and R. J. Rajarathinam, ‘Speech analysis of teaching assistant interventions in small group collaborative problem solving with undergraduate engineering students’, British Journal of Educational Technology, vol. 55, no. 4, pp. 1583–1601, 2024.
